Recently, I came across a situation where I had to convert an input text file to Avro format. I dug around a lot and found a good solution.

In this article, I am going to share how to convert a text file to the Avro file format easily.

But before moving ahead with the method to convert a text file to the Avro file format, let me tell you some advantages of using the Avro file format.

Advantages of Avro file format

Here are some of the common benefits of using the Avro file format in Hadoop:

1. Smallest Size

2. Compressed one block at a time, so files stay splittable

3. Object structure is maintained

4. Supports reading old data with new schema

You can read more about Hadoop file formats here. In that post, I have shared which Hadoop file format gives the smallest size, along with its other benefits.

Now that you know the benefits of using the Avro file format, let me show you how to convert a text file to an Avro file in Hadoop.

The only issues I found with Avro files are that you need a schema to read from and write to them, and that serialization is relatively slow.

Convert Text file to Avro File: Easy Way!

Let’s suppose you are working in Pig and you have an input text file. For example, let’s have the below text file.

[box type=”shadow”] Tez – Hindi for “speed” provides a general-purpose, highly customizable framework that creates simplifies data processing tasks across both small scale (low-latency) and large-scale (high throughput) workloads in Hadoop. It generalizes the MapReduce paradigm to a more powerful framework. By providing the ability to execute a complex DAG (directed acyclic graph) of tasks for a single job so that projects in the Apache Hadoop ecosystem such as Apache Hive, Apache Pig, and Cascading can meet requirements for human-interactive response times and extreme throughput at petabyte scale (clearly MapReduce has been a key driver in achieving this).We are going to read in a truck driver statistics files. We are going to compute the sum of hours and miles logged driven by a truck driver for a year. Once we have the sum of hours and miles logged, we will extend the script to translate a driver id field into the name of the drivers by joining two different files.

[/box]

I have stored this in a text file called “pig sample text file.txt”.

Now let’s convert this text file to an Avro file in Pig.

Step 1: Load this text file into a relation. Let’s call it textfile.

textfile = load '/user/cloudera/pig/pig sample text file.txt' using PigStorage('.') as (datafile);
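One caveat about the statement above: PigStorage('.') treats the period as a field delimiter, and because the schema names a single field, Pig keeps only the text before the first period on each line. If you would rather keep each line intact, a minimal sketch using Pig’s built-in TextLoader could look like this. This is an alternative I am suggesting, not part of the original steps; the path, relation name and field name are the same as above.

```pig
-- Alternative to PigStorage('.'): TextLoader reads every input line
-- as a single chararray field, so nothing is cut off at the periods.
textfile = LOAD '/user/cloudera/pig/pig sample text file.txt'
           USING TextLoader() AS (datafile:chararray);
```

Either way, the relation textfile ends up with one field called datafile, so the store step stays the same.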

Step 2: Now that we have the data in the relation textfile, it’s time to store it as an Avro file.

To store the relation as an Avro file, use this storage function from the piggybank library:

org.apache.pig.piggybank.storage.avro.AvroStorage()

And the query will be:

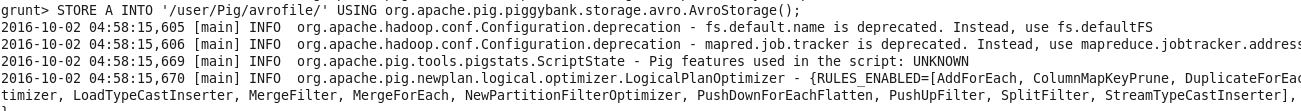

STORE textfile INTO '/user/Pig/avrofile' USING org.apache.pig.piggybank.storage.avro.AvroStorage();

If you get an error like this:

[box type=”shadow”] ERROR 1070: Could not resolve org.apache.pig.piggybank.storage.avro.AvroStorage using imports: [, java.lang., org.apache.pig.builtin., org.apache.pig.impl.builtin.] [/box]

That means you have not registered the piggybank.jar file. The solution is very simple: download the piggybank.jar file and register it in the grunt shell.

You can download the piggybank.jar file using this link.
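Registering the jar in the grunt shell is a single statement. The sketch below uses a placeholder path, which is only an assumption; replace it with wherever you saved the file.

```pig
-- Placeholder path: point this at your own copy of piggybank.jar.
REGISTER /path/to/piggybank.jar;

-- Depending on your Pig installation, the piggybank AvroStorage may also
-- need the Avro, json-simple and Jackson jars registered in the same way.
```

After this, run the STORE statement again. Newer Pig releases also ship a built-in AvroStorage, in which case the short name AvroStorage() may work without piggybank; check the documentation for your version.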

That’s all. You now have the Avro file. This was the simplest way to convert a text file into the Avro file format.

Do try it, and let me know in the comments if you run into any errors.
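If you want to double-check the result, one option is to load the output back with the same storage function. This is just a quick sketch; the relation name avrodata is made up for illustration, and the path is the output path used in the STORE statement.

```pig
-- Read the Avro output back; AvroStorage picks up the schema
-- that was written into the Avro files.
avrodata = LOAD '/user/Pig/avrofile'
           USING org.apache.pig.piggybank.storage.avro.AvroStorage();

DESCRIBE avrodata;  -- show the schema Pig derived from the Avro files
DUMP avrodata;      -- print the stored records
```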

Hello,

I am doing a project using Apache Flink and Kafka, and hence ZooKeeper. I am really new at this, so I haven’t touched Apache Pig at all. Is it possible to convert a txt file to Avro using Flink or Kafka?

I would rather not learn a new Apache platform just for converting data.