Now as we know almost everything about HDFS in this HDFS tutorial and it’s time to work with different file formats. This Input file formats in Hadoop is the 7th chapter in HDFS Tutorial Series.

There are mainly 7 file formats supported by Hadoop. We will see each one in detail here-

Input File Formats in Hadoop

2. JSON Records

3. Avro Files

4. Sequence Files

5. RC Files

6. ORC Files

7. Parquet Files

Text/CSV Files

Text and CSV files are quite common and frequently Hadoop developers and data scientists received text and CSV files to work upon.

However CSV files do not support block compression, thus compressing a CSV file in Hadoop often comes at a significant read performance cost.

That is why if you are working with text or CSV files, don’t include header ion the file else it will give you null value while computing the data. (In Hive Tutorial I will let you know how to deal with it).

Each line in these files should have a record and so there is no metadata stored in these files.

You must know how the file was written in order to make use of it.

JSON Records

JSON records contain JSON files where each line is its own JSON datum. In the case of JSON files, metadata is stored and the file is also splittable but again it also doesn’t support block compression.

The only issue is there is not much support in Hadoop for JSON file but thanks to the third party tools which helps a lot. Just do the experiment and get your work done.

AVRO Files

Avro is quickly becoming the top choice for the developers due to its multiple benefits. Avro stores metadata with the data itself and allows specification of an independent schema for reading the file.

You can rename, add, delete and change the data types of fields by defining a new independent schema. Also, Avro files are splittable, support block compression and enjoy broad, relatively mature, tool support within the Hadoop ecosystem.

Sequence File

Sequence file stores data in binary format and has a similar structure to CSV file with some differences. It also doesn’t store metadata and so only schema evolution option is appending new fields but it supports block compression.

Due to complexity, sequence files are mainly used in flight data as an intermediate storage.

RC File (Record Columnar Files)

RC file was the first columnar file in Hadoop and has significant compression and query performance benefits.

But it doesn’t support schema evaluation and if you want to add anything to RC file you will have to rewrite the file. Also, it is a slower process.

ORC File (Optimized RC Files)

ORC is the compressed version of RC file and supports all the benefits of RC file with some enhancements like ORC files compress better than RC files, enabling faster queries.

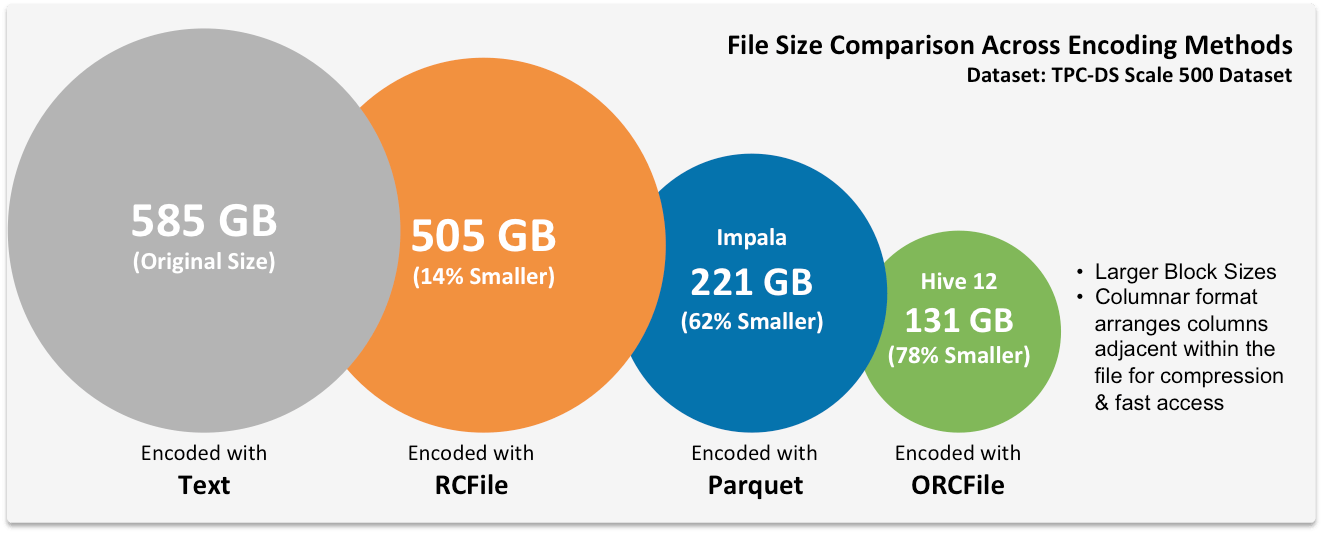

But it doesn’t support schema evolution. Some benchmarks indicate that ORC files compress to be the smallest of all file formats in Hadoop.

Parquet Files

Parquet file is another columnar file given by Hadoop founder Doug Cutting during his Trevni project. Like another Columnar file RC & ORC, Parquet also enjoys the features like compression and query performance benefits but is generally slower to write than non-columnar file formats.

In Parquet format, new columns can be added at the end of the structure. This format was mainly optimized for Cloudera Impala but aggressively getting popularity in other ecosystems as well.

One thing you should note here is, if you are working with Parquet file in Hive then you should take some precautions.

Note: In Hive Parquet column names should be lowercase. If it is of mixed cases then hive will not read it and will give you null value.

However, Impala can handle mixed cases.

Here is one research was done on the size of Hadoop file formats in terms of size. Just check for your reference-

So far I have explained different file formats available in Hadoop but suppose you are a developer and client has given you some data and has asked you to work from scratch. Then the most common issue that developer’s face is which file format to use as it’s the file format which allows you flexibility and features.

Here I am going to talk about how to select best file format in Hadoop

When anyone starts working on the files, below are the three factors they consider–

• Partial read performance- how fast can you read individual columns within a file

• Full read performance- how fast can you read every data element in a file

Now as we know the characteristics of all the file formats and you know your requirements so it will be easier for you to select the best one.

Columnar formats are better when you need read performance but you will have to compromise and exactly opposite when it comes to non-columnar file formats. CSV files are good when considering write performance but due to lack of compression and column-orientation are slow for reads.

Here are some of the key factors to consider while selecting the best file format–

Source: LinkedIn

Source: LinkedIn

Based on these factors, I am summarizing the fact that which file formats to use in which cases here.

• If query performance against the data is most important- ORC (HortonWorks/Hive) or Parquet (Cloudera/Impala)

• if your schema is going to change over time- Avro

• if you are going to extract data from Hadoop to bulk load into a database-CSV

So these were the different Hadoop file formats and how to select the File format in Hadoop.

Previous Chapter: HDFS File ProcessingCHAPTER 8: HDFS Commands